Online Disinformation Primer

In 2020, we saw a surge in the spread of two viruses. As the novel COVID-19 virus proliferated across the globe, it was followed by a second virus of online misinformation.

In the vacuum of uncertainty around COVID-19’s origins and treatments, waves of untrue stories, dangerous home remedies, and groundless conspiracy theories rippled across online communities. Consequently, both disinformation and misinformation, enabled by preexisting distrust of political and scientific experts, exacerbated skepticism of global and national health institutions and the vaccine itself. After a contentious presidential election in late 2020, online misinformation further undermined people’s perception of the credibility of the democratic system in the US, culminating in the January 6th attack on Congress. Then-President Donald Trump’s subsequent ban from Facebook and Twitter ignited debate over the role that social media platforms should play in public discourse, and whether safety should be prioritized over free speech. It is clear in 2023 that online misinformation remains prevalent and pervasive, demanding continuous attention and effort to resolve.

Let’s explore divisive digital discourse, define some of the common terms in this debate, and contextualize the issue with insights from some of the 200+ experts, community leaders, social media employees, users, journalists, policymakers, and others. In doing so, we hope to develop a broader understanding of disinformation for more resilient online discourse in 2023 and beyond.

Disinformation vs Misinformation vs Malinformation

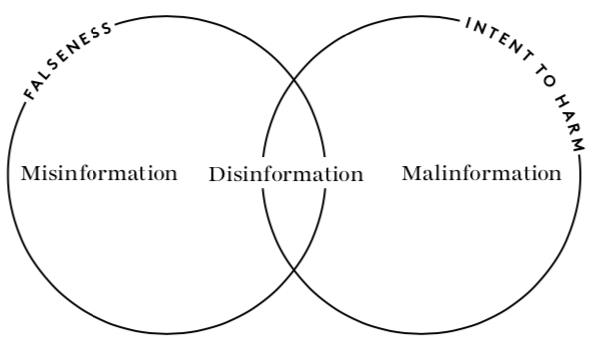

Disinformation is information that is known to be false yet is shared intentionally for nefarious purposes. One example of disinformation is lying about how or when to vote with the intent to prevent people from a specific political party or identity group from casting their ballots. This differs from misinformation, which is false information that is unintentionally spread by people who believe it to be true, like when an ordinary user unknowingly shares a quote from a fake doctor or from a business spreading falsehoods about coronavirus treatments. A third type of content, malinformation, refers to any authentic information that is intentionally spread to cause harm. This includes hacked or private materials that are spread without their owner’s consent to damage the owner’s reputation.

Simply put, dis/malinformation is intentional; misinformation is unintentional.

These terms are relatively new — they were first defined by Claire Wardle and Hossein Derakhshan in 2017 and have more recently been widely adopted by those studying these phenomena as an emerging academic field. However, dis-, mis-, and malinformation have existed for thousands of years. Documented cases of the political use of disinformation can be traced at least as far back as ancient Rome. And concerningly, the term “misinformation” is increasingly used to describe information someone simply disagrees with, as a tool to moderate or even censor speech.

The internet is not responsible for disinformation; the internet is a platform that augments the reach and vibrancy of disinformation. The way we communicate online unlocks a rich ecosystem where false information can spread faster than ever before (and farther than corrections to misinformation), sometimes leading to real-world harm.

Types of Disinformation

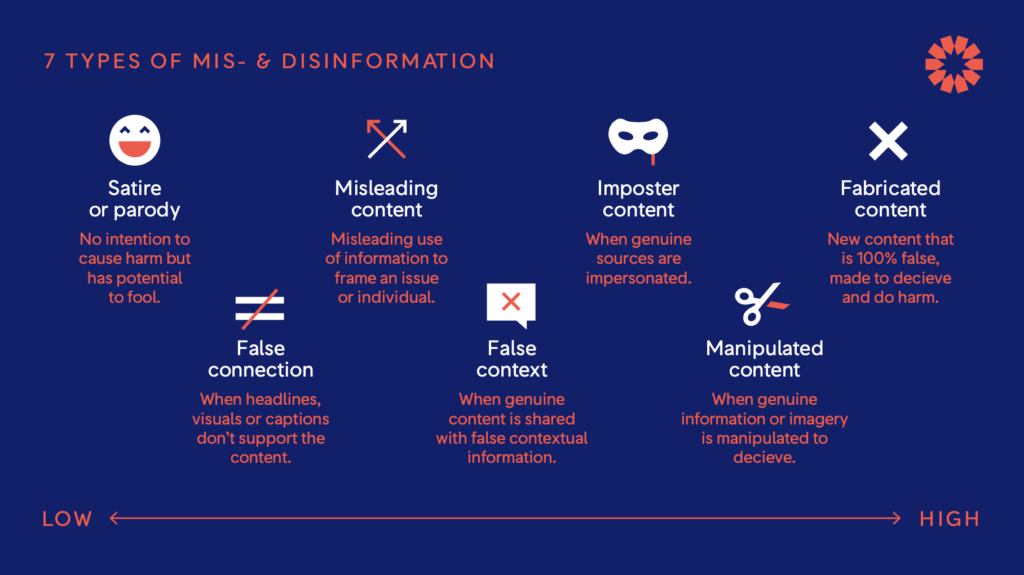

Within the categories of dis-, mis-, and malinformation, there are even more ways of subcategorizing deceptive content. Wardle and Derakhshan break it up into seven types: satire or parody, misleading content, imposter content, fabricated content, false connections, false context, and manipulated content. The European Association for Viewers Interests (EAVI) lists several other examples of content that can be misleading, including clickbait, sponsored content, conspiracy theories, and pseudoscience.

What About Deepfakes?

Deepfakes are a specific type of manipulated content that can be difficult for most people to identify. They are images or videos that have been generated using artificial intelligence to make it look like somebody is doing or saying something that never actually happened. By contrast, a “shallow fake” or “cheap fake” is a piece of content that has been similarly manipulated using more traditional techniques, like selectively editing a video to remove key context or slowing down a clip to make it look like someone is drunk or slurring their speech.

Deepfakes (and cheap fakes) have already been used, and could increasingly be deployed, as political tools to trick governments or citizens into believing false narratives. In March 2022, near the beginning of the Russian invasion of Ukraine, a video surfaced purporting to show Ukrainian President Volodymyr Zelensky advising Ukrainians to lay down their arms against Russia and return to their families. Experts quickly identified the video as a deepfake, but it made the rounds online before being banned from some social media sites. Furthermore, generative AI tools are making it quick, easy, and cheap to create fake videos, audio, and text.

Norton Security advises consumers to look for key signs that a video is a deepfake, like unnatural eye movements or awkward facial-feature positioning, but as deepfake technology improves, it will become even harder to identify manipulated content with the naked eye.

Where Does Today's Disinformation Come From?

After the US presidential election in 2016, the presence of foreign disinformation in American social media feeds was exposed. Through the Internet Research Agency, Moscow promoted false and divisive content across American media channels for months leading up to the November election. Using personalized messaging, the information campaign targeted people from a range of identity groups, including liberals, conservatives, progressives, LGBTQ+ people, African Americans, Native Americans, Muslims, Christians, Black Lives Matter supporters, Blue Lives Matter supporters, gun enthusiasts, Texas secessionists, and more. Instead of advocating one viewpoint, this barrage of Russian disinformation and misinformation across so many identity groups was meant to confuse and divide Americans by amplifying polarizing or untrue narratives.

To add even more complexity to this issue, money-seekers outside the US have capitalized on US-centric fake news stories by hosting them on their websites where they can then rake in hundreds of dollars per story in ad revenue after the articles go viral.

Since 2016, the main source of disinformation online has shifted from foreign governments to political or entrepreneurial actors. Due in part to increased public awareness and proactive steps by social media sites and the US government to limit foreign influence operations, the amount of foreign disinformation during the 2020 US presidential election was lower than it was in 2016, even though disinformation campaigns originated from an increased number of countries. Instead, 2020 brought a slew of domestic disinformation from radical partisans or those looking to make a quick buck. Some pundits have spread false content because it allows them to sell more ads and products. Some political elites have embraced conspiracy theories, driving them into the mainstream. Other users capitalized on some of the techniques that Russia used in 2016 to spread lies that favor their own political opinions. Around the world, companies specialize in spreading disinformation for whoever is willing to pay them, making it even harder to determine its true source. In the US, scholars have identified how certain media strategies leverage the label of disinformation to reinforce existing power structures at the expense of marginalized communities.

Disinformation is a problem that is not going away anytime soon. We hope this primer helps conceptualize the different types and sources of disinformation, as a deep understanding of the problem is precisely what our world needs to minimize future damage online.

The Convergence Dialogue on Digital Discourse for a Thriving Democracy and Resilient Communities is a project of Convergence Center for Policy Resolution, a 501(c)(3) bridgebuilding organization that specializes in spurring action on divisive issues through expert collaboration across divides. For more information on the Dialogue on Digital Discourse, visit the project page here. To learn more about funding the project, please contact Anjali Singh Code at [email protected].