Design

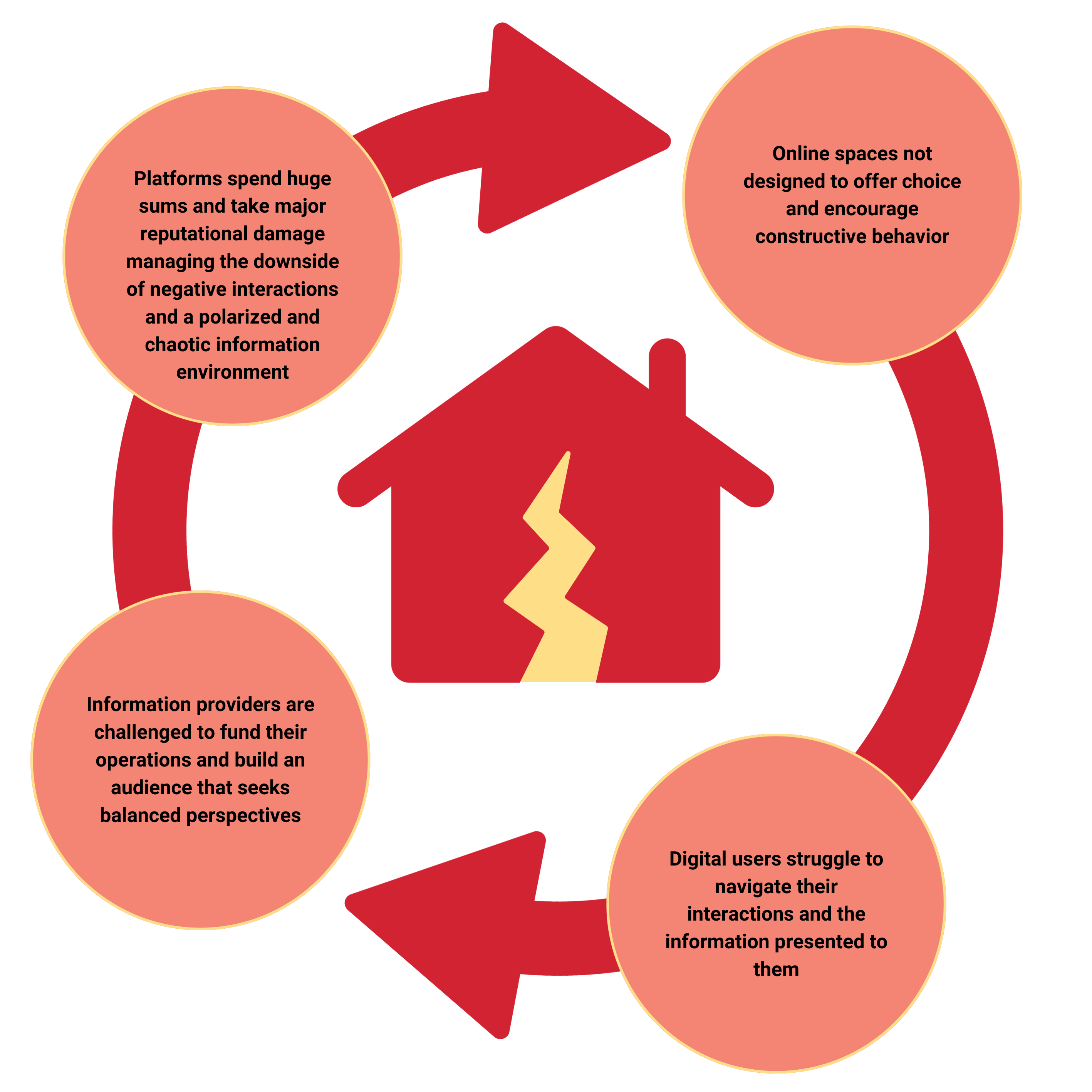

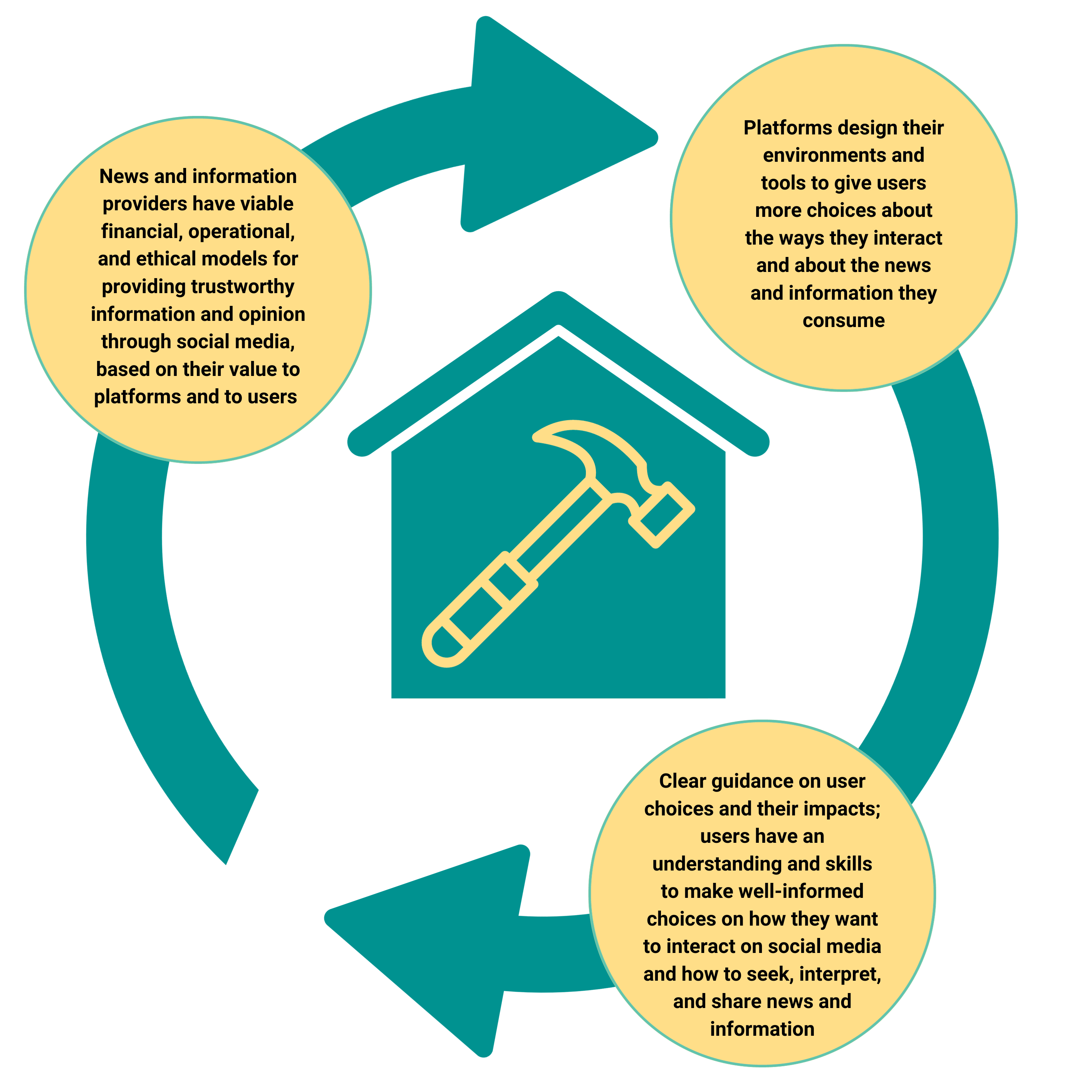

The first pillar centers on how profoundly design influences user behavior and wellbeing, information flow, and discourse dynamics. We must create and continuously improve social media platforms and tools that empower users while mitigating digital harms and protecting free speech. Designing platforms for user agency can foster a sense of ownership and autonomy, enhance intrinsic motivation for responsible engagement, and make users aware of the choices available to them and the likely impact of those choices on themselves and others.